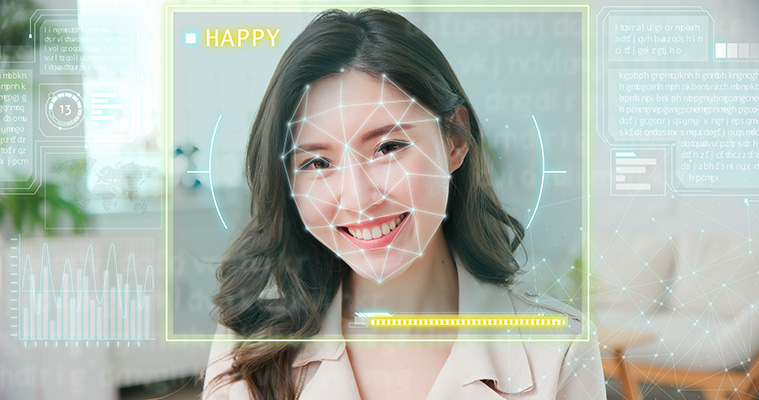

Face Emotion Detection

Automatically detect faces and the emotions expressed in images

A face detection model can be trained to locate human faces within an image. Any detected faces can then be analysed to estimate the emotion being expressed, such as happy, sad, angry or neutral. The following demonstration uses Amazon's Rekognition service to detect and analyse human faces within an image. Concerns have been raised over whether a person's mental state can be determined from their facial expression, and over the ethics of attempting to do so. Furthermore, Microsoft have announced that they will be removing this functionality from their Azure Face service at the end of June 2023.

Instructions

- Select a sample image below, upload one of your own or take a picture using your webcam.

- Once the image has been analysed, the results will be shown below. Any faces detected will be outlined with a box, and the emotion detected for each face will be displayed along with the model's confidence as a percentage (%).
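
For readers curious how results like these might be derived, the following is a minimal sketch, not the demo's actual code. It assumes the Rekognition `DetectFaces` response shape (a `FaceDetails` list in which each face has a `BoundingBox` given as ratios of the image size and an `Emotions` list of `Type`/`Confidence` pairs). The function names `summarise_faces` and `detect_faces`, and the sample response, are hypothetical and used for illustration only.

```python
from __future__ import annotations

from typing import Any


def summarise_faces(
    response: dict[str, Any], image_width: int, image_height: int
) -> list[dict[str, Any]]:
    """Return one summary per face: pixel box, strongest emotion, confidence."""
    summaries = []
    for face in response.get("FaceDetails", []):
        box = face["BoundingBox"]  # values are ratios of the image dimensions
        emotions = face.get("Emotions", [])
        # Pick the emotion with the highest confidence, if any were returned.
        top = max(emotions, key=lambda e: e["Confidence"], default=None)
        summaries.append(
            {
                "left": round(box["Left"] * image_width),
                "top": round(box["Top"] * image_height),
                "width": round(box["Width"] * image_width),
                "height": round(box["Height"] * image_height),
                "emotion": top["Type"] if top else None,
                "confidence": top["Confidence"] if top else None,
            }
        )
    return summaries


def detect_faces(image_bytes: bytes) -> dict[str, Any]:
    """Call Rekognition. Needs boto3 and AWS credentials; not run in this sketch."""
    import boto3  # imported lazily so the rest of the sketch runs without it

    client = boto3.client("rekognition")
    # Attributes=["ALL"] asks for the full attribute set, including emotions.
    return client.detect_faces(Image={"Bytes": image_bytes}, Attributes=["ALL"])


# Hypothetical response, trimmed to the fields used above.
sample_response = {
    "FaceDetails": [
        {
            "BoundingBox": {"Left": 0.25, "Top": 0.10, "Width": 0.20, "Height": 0.30},
            "Emotions": [
                {"Type": "HAPPY", "Confidence": 93.5},
                {"Type": "CALM", "Confidence": 4.2},
            ],
        }
    ]
}

faces = summarise_faces(sample_response, image_width=800, image_height=600)
for face in faces:
    print(f"{face['emotion']} ({face['confidence']:.1f}%) at {face['left']},{face['top']}")
```

The bounding box is converted from ratios to pixels so that it can be drawn directly over the image, and only the highest-confidence emotion is reported for each face.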

More information about this demo

Detection of human faces can be used to automatically count the people in a room, or the number of people passing a certain area over time, for infrastructure assessments. The emotions expressed in the detected faces could be used to track people's emotions over time in a wide variety of settings, provided the necessary legal and ethical issues were addressed.

All images analysed using this demo are stored for 24 hours and then automatically deleted using Microsoft's Azure data lifecycle management.
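
As an illustration of how such a retention rule might be expressed, the sketch below builds an Azure Blob Storage lifecycle management policy as a Python dictionary and prints it as JSON. It is an assumption about the general approach, not this demo's actual configuration: the rule name `delete-after-one-day` and the `uploads/` prefix are hypothetical.

```python
import json

# Hypothetical lifecycle policy: delete block blobs under "uploads/"
# once they are more than one day past their last modification.
lifecycle_policy = {
    "rules": [
        {
            "enabled": True,
            "name": "delete-after-one-day",  # hypothetical rule name
            "type": "Lifecycle",
            "definition": {
                "actions": {
                    "baseBlob": {
                        "delete": {"daysAfterModificationGreaterThan": 1}
                    }
                },
                "filters": {
                    "blobTypes": ["blockBlob"],
                    "prefixMatch": ["uploads/"],  # hypothetical container prefix
                },
            },
        }
    ]
}

print(json.dumps(lifecycle_policy, indent=2))
```

Lifecycle rules are evaluated periodically rather than at the exact moment a blob reaches the threshold, so under a policy like this the actual deletion may happen somewhat later than 24 hours after upload.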